The Hidden Risk Behind the Code: Why AI Bias in Healthcare Demands Urgent Attention

Artificial intelligence (AI) is rapidly transforming modern medicine, offering tools that promise increased diagnostic accuracy, faster treatment plans, and streamlined administrative tasks. From radiology to patient triage, machine learning algorithms now play a central role in clinical decision-making. However, amid the excitement lies an increasingly scrutinized issue: AI bias in healthcare. This bias, often invisible in code but glaring in outcome, threatens to amplify existing health disparities if left unaddressed. As AI systems are trained on historical data, they may inadvertently perpetuate the very inequalities healthcare seeks to overcome. The importance of recognizing and mitigating this bias cannot be overstated, as it has real consequences for patients, especially those from historically marginalized groups.


Addressing AI bias in healthcare is more than a technical challenge; it is a moral imperative. Real-world examples have already demonstrated the risks, from skewed diagnostic tools to inequitable treatment prioritization. Tackling these issues requires not only technical adjustments but also a broader cultural shift in how healthcare innovation is conceptualized and regulated. This article examines the mechanisms, manifestations, and mitigation strategies for AI bias in healthcare, drawing on real-world examples and the ethical reforms they make urgent. In doing so, it applies the principles of Experience, Expertise, Authoritativeness, and Trustworthiness (EEAT) to ensure accuracy, clarity, and credibility throughout.

Understanding the Root Causes of AI Bias in Healthcare

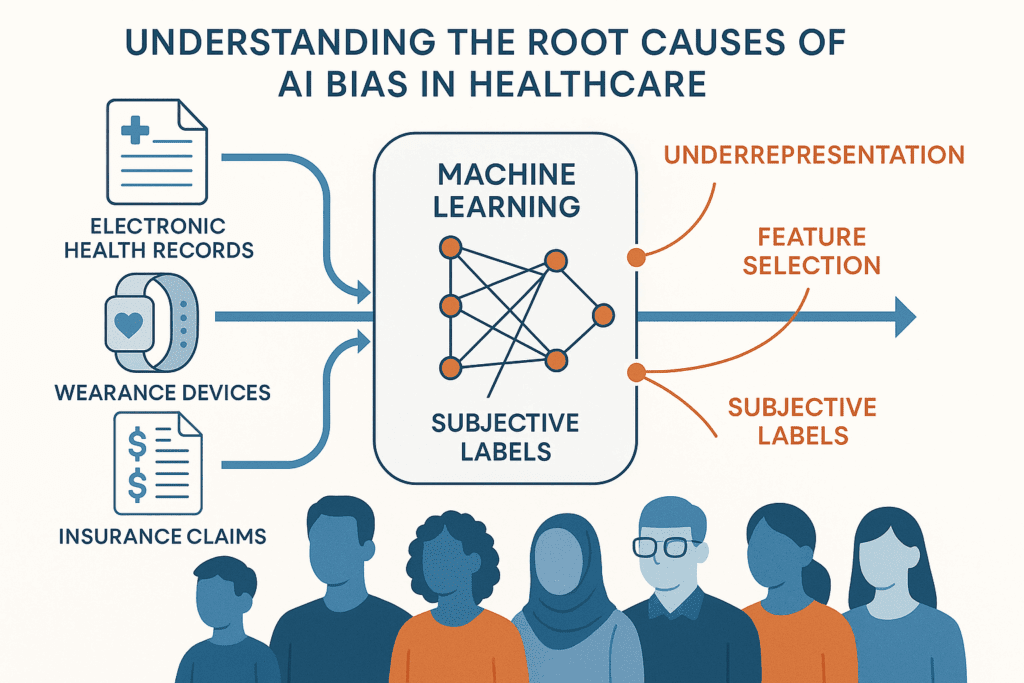

To understand how AI bias in healthcare emerges, it is crucial to first examine how machine learning models are built. These systems rely on vast datasets, often culled from electronic health records (EHRs), clinical trials, insurance claims, or wearable device outputs. While these data sources offer incredible insights, they are also deeply reflective of existing societal and systemic biases. If minority populations are underrepresented in medical datasets, or if historical healthcare decisions were influenced by racial, gender, or socioeconomic prejudice, the AI model will internalize those patterns.

One of the most common causes of bias is skewed training data. For instance, if a dataset contains few examples of a disease in certain demographic groups, the algorithm may struggle to diagnose that condition correctly in those populations. Bias can also be introduced during feature selection, when developers decide which variables to include. Socioeconomic status, zip code, or insurance coverage may serve as proxies for race or other protected characteristics, so a model can effectively act on race even when race is never given to it as an input.
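A simple way to test whether a candidate feature acts as a proxy is to measure how well that feature alone predicts the protected attribute. The sketch below is a minimal illustration, not a standard tool: the function name `proxy_strength` and the toy zip-code data are hypothetical, and it assumes both columns are categorical.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Compare how well a single feature predicts a protected attribute.

    Returns (base_rate, proxy_accuracy):
    - base_rate: accuracy of always guessing the most common protected value.
    - proxy_accuracy: accuracy of guessing the most common protected value
      separately within each feature value (for example, each zip code).
    A large gap suggests the feature can stand in for the protected attribute.
    """
    n = len(protected_values)
    base_rate = Counter(protected_values).most_common(1)[0][1] / n

    # Group the protected attribute by feature value.
    by_feature = defaultdict(list)
    for feature, protected in zip(feature_values, protected_values):
        by_feature[feature].append(protected)

    # Within each feature value, count how many rows the majority guess gets right.
    correct = sum(Counter(vals).most_common(1)[0][1] for vals in by_feature.values())
    return base_rate, correct / n


# Hypothetical toy data: two zip codes, two demographic groups.
zip_codes = ["10001"] * 5 + ["60601"] * 5
group = ["A", "A", "A", "A", "B", "B", "B", "B", "B", "A"]
print(proxy_strength(zip_codes, group))  # (0.5, 0.8)
```

If `proxy_accuracy` sits well above `base_rate`, the feature leaks information about the protected attribute. Measured on the same rows, the score is optimistic for features with many rare values (a unique patient ID would score 100%), so in practice it should be estimated on held-out data.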

Another source of bias is label bias, which occurs when the outcomes used to train AI are themselves shaped by subjective human judgment. For example, if historical medical decisions favored certain racial groups over others, an AI trained on those decisions may learn to replicate the disparities. Additionally, the context in which data is collected can introduce sampling (selection) bias. Consider wearable devices: if they are predominantly worn by affluent individuals, AI models trained on their data may not generalize well to other populations.

The underlying mathematical structures of AI models can also inadvertently contribute to bias. Optimizing for average performance may mask poor results for minority groups. Unless models are explicitly designed to account for subgroup performance, they can easily privilege the majority population. This issue has profound implications, especially in high-stakes healthcare settings where equitable care is essential.
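The masking effect is easy to see with a few lines of arithmetic. In this hypothetical example, a model is right for every majority-group patient but for only half of minority-group patients, yet the headline accuracy still looks strong. The function name and data are illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and a dict of accuracy within each subgroup."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        hits[g] += int(truth == pred)
    overall = sum(hits.values()) / len(y_true)
    per_group = {g: hits[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical example: 90 majority-group patients, 10 minority-group patients.
y_true = [1] * 100
y_pred = [1] * 90 + [1] * 5 + [0] * 5
groups = ["majority"] * 90 + ["minority"] * 10
overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)    # 0.95
print(per_group)  # {'majority': 1.0, 'minority': 0.5}
```

Reporting the per-group dictionary alongside the overall number is the simplest guard against this failure mode.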

Real-World Examples of AI Bias in Healthcare Settings

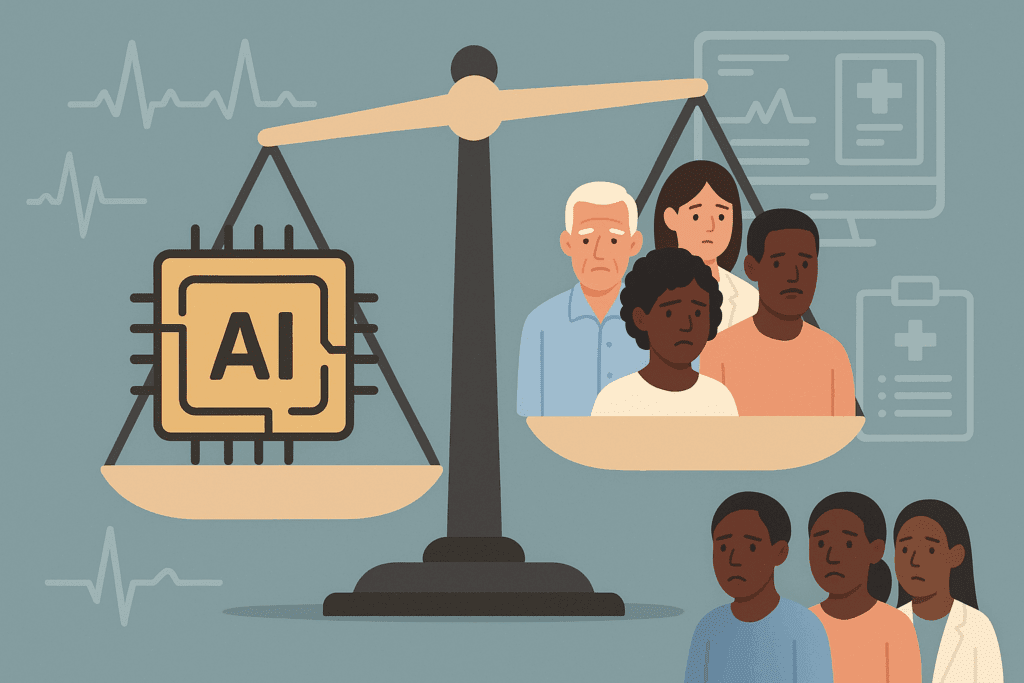

Instances of AI bias in healthcare are no longer theoretical; they have been documented in real clinical environments. Perhaps the best-known example is a 2019 study published in Science, which found that a widely used commercial algorithm systematically underestimated the health needs of Black patients compared with equally sick white patients. The algorithm, used to identify patients for enrollment in high-risk care management programs, relied on healthcare spending as a proxy for health needs. Because less money was spent on Black patients with the same level of need, often due to barriers to access, the algorithm falsely inferred that they were healthier, and fewer Black patients were flagged for the extra care these programs provide.
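The mechanism can be reproduced with a toy simulation. This is a hypothetical sketch, not the study's data or method: two groups have identical illness burden, but an assumed access factor of 0.5 halves recorded spending for group B, and the program selects the top four patients by whichever score it is given.

```python
def build_cohort(access_b=0.5):
    """Hypothetical cohort: groups A and B have identical illness burden,
    but group B's recorded spending is scaled down by an access factor."""
    patients = []
    for need in range(1, 6):  # need = number of active chronic conditions
        patients.append({"group": "A", "need": need, "spending": need * 1000})
        patients.append({"group": "B", "need": need, "spending": need * 1000 * access_b})
    return patients


def top_k(patients, k, key):
    """Select the k patients ranked highest on `key` ("need" or "spending")."""
    return sorted(patients, key=lambda p: p[key], reverse=True)[:k]


def count_group(selected, group):
    """Count how many selected patients belong to `group`."""
    return sum(1 for p in selected if p["group"] == group)


cohort = build_cohort()
by_need = top_k(cohort, 4, "need")
by_spending = top_k(cohort, 4, "spending")
print(count_group(by_need, "B"), count_group(by_spending, "B"))  # 2 1
```

Ranking on need splits the four slots evenly, while ranking on spending hands three of them to group A. Setting `access_b=1.0` removes the gap, which shows the disparity comes from the proxy label rather than from any difference in health.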

Another telling case involves skin cancer detection algorithms. Many of these models are trained predominantly on images of lighter skin tones, rendering them significantly less effective at diagnosing conditions on darker skin. This discrepancy can delay diagnoses for people of color, resulting in worse outcomes and undermining trust in medical AI systems.

Examples of AI bias are also evident in maternal care. Algorithms used to predict pregnancy complications have been found to perform poorly for non-white patients. In some cases, risk scores underestimated the likelihood of adverse events among Black and Hispanic women, potentially delaying critical interventions. Such failures are not just technical; they are deeply human, because they affect the lives and health outcomes of vulnerable mothers and their babies.

Natural language processing (NLP) tools used to analyze physician notes can also inherit racial bias. One study found that the language used about Black patients in medical notes was more likely to contain negative descriptors; when AI tools parse these notes, such descriptors can carry into their outputs and influence future treatment decisions. These biases perpetuate harmful stereotypes, reinforcing disparities instead of dismantling them.

Even predictive tools used for hospital admissions and readmissions have exhibited bias. Models predicting the likelihood of readmission often use past hospital utilization as a key input. However, communities with historically limited access to hospitals may have lower utilization not because of better health but due to systemic barriers. These flawed assumptions can lead to under-prioritization of care for already underserved groups.

Ethical Implications and the Need for Transparency

The ethical implications of AI bias in healthcare are profound. Healthcare is grounded in the principle of “do no harm,” yet biased algorithms risk violating this foundational ethic by reinforcing inequities. Transparency in AI model development and deployment is critical to upholding trust and accountability. Patients, providers, and regulators must understand how algorithms function, what data they are trained on, and how decisions are made.

A lack of explainability in AI systems can obscure errors and mask discriminatory outcomes. When a model provides a recommendation without a clear rationale, it becomes difficult for clinicians to interrogate its validity or to identify potential bias. This “black box” problem poses a significant ethical dilemma. As AI becomes more embedded in clinical workflows, ensuring transparency must be a priority.

Another pressing ethical concern is informed consent. Patients may not be aware that their data is being used to train or power AI systems, and even fewer are likely to understand the implications of biased algorithms. Ethical AI development should include robust consent mechanisms and clear communication about how data will be used.

The deployment of biased AI tools also has legal ramifications. Discriminatory algorithms may violate civil rights laws, particularly if they result in unequal access to healthcare services based on race, gender, or other protected categories. Regulatory frameworks must evolve to hold developers and healthcare providers accountable for AI-driven discrimination.

The ethics of AI bias in healthcare cannot be fully addressed without tackling structural inequities. Technology cannot be divorced from the social context in which it operates. If the goal is to create ethical and effective AI tools, then healthcare systems must invest in broader reforms that address access, quality, and equity.

Strategies for Identifying and Mitigating Bias in Healthcare AI

Tackling AI bias in healthcare requires a multipronged approach. First, diversity in data is essential. Developers must proactively seek out datasets that are representative of the populations they aim to serve. This means including diverse demographics across race, ethnicity, gender, age, and socioeconomic status. Institutions should prioritize the collection of granular, high-quality data that captures these dimensions.

Beyond data collection, bias detection tools must become standard practice in AI development. Researchers have developed fairness metrics that compare a model's predictions and error rates across subgroups. Demographic parity compares the share of each group that the model flags, equalized odds compares true-positive and false-positive rates across groups, and disparate impact expresses one group's selection rate as a ratio of another's. These checks should be incorporated into every stage of model development, from training to validation to deployment.
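These metrics are straightforward to compute from a model's binary predictions. The following sketch assumes two groups labeled `R` (reference) and `C` (comparison), 0/1 labels and predictions, and that each group contains both positive and negative cases; the function names are illustrative rather than taken from a particular fairness library.

```python
def group_rates(y_true, y_pred):
    """Selection rate, true-positive rate and false-positive rate for one group.
    Assumes the group has at least one positive and one negative label."""
    preds_pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    preds_neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    selection = sum(y_pred) / len(y_pred)
    tpr = sum(preds_pos) / len(preds_pos)
    fpr = sum(preds_neg) / len(preds_neg)
    return selection, tpr, fpr


def fairness_report(y_true, y_pred, groups, reference, comparison):
    """Compare the `comparison` group against the `reference` group."""
    def subset(g):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        return [y_true[i] for i in idx], [y_pred[i] for i in idx]

    sel_r, tpr_r, fpr_r = group_rates(*subset(reference))
    sel_c, tpr_c, fpr_c = group_rates(*subset(comparison))
    return {
        # Demographic parity: difference in the share of patients flagged.
        "demographic_parity_diff": sel_c - sel_r,
        # Disparate impact: ratio of selection rates (1.0 means parity).
        "disparate_impact_ratio": sel_c / sel_r,
        # Equalized odds: both the TPR gap and the FPR gap should be near zero.
        "tpr_diff": tpr_c - tpr_r,
        "fpr_diff": fpr_c - fpr_r,
    }


# Hypothetical labels and predictions for four patients in each group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["R", "R", "R", "R", "C", "C", "C", "C"]
report = fairness_report(y_true, y_pred, groups, reference="R", comparison="C")
print(report)
```

In this toy data the comparison group is flagged at one third the rate of the reference group (0.25 versus 0.75), far below the 0.8 "four-fifths" rule of thumb often borrowed from US employment guidance, and its true-positive and false-positive rates both differ from the reference group's, so equalized odds is violated as well.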

Algorithmic auditing is another vital strategy. Independent audits conducted by third-party experts can uncover hidden biases that internal teams may overlook. These audits should be routine and mandatory, with transparent reporting of findings. Just as clinical trials are subject to rigorous oversight, AI tools that affect patient care should be as well.

Developers must also adopt design practices that prioritize equity. This includes reweighting training data, adjusting loss functions to penalize biased outcomes, and developing algorithms that explicitly account for subgroup performance. Participatory design, which involves input from diverse stakeholders—including patients and frontline clinicians—can help ensure that AI tools align with real-world needs and values.
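Reweighting can be as simple as assigning each training example a weight so that group membership and outcome become statistically independent in the weighted data. The sketch below follows the reweighing idea described by Kamiran and Calders, w(g, y) = P(g) · P(y) / P(g, y); the data are hypothetical, and this is one possible pre-processing technique rather than a method any particular product is known to use.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-sample weights w(g, y) = P(g) * P(y) / P(g, y).

    When every (group, label) combination appears, the weighted data have
    group membership and label statistically independent.
    """
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [
        (count_g[g] / n) * (count_y[y] / n) / (count_gy[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Hypothetical data: positive labels are over-represented in group A.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

print(weighted_positive_rate(groups, labels, [1.0] * 8, "A"))  # 0.75
print(weighted_positive_rate(groups, labels, weights, "A"))    # ≈ 0.5
print(weighted_positive_rate(groups, labels, weights, "B"))    # ≈ 0.5
```

Before weighting, 75% of group A and 25% of group B carry a positive label; after weighting, both groups sit at 50%. Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`.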

Finally, healthcare institutions must foster a culture of accountability and ethics. This means establishing governance frameworks, ethical review boards, and interdisciplinary teams that oversee AI projects. Education and training programs can equip healthcare professionals with the skills needed to critically assess AI tools and advocate for their patients.

The Role of Regulation and Policy in Ensuring Fairness

Government and regulatory bodies play a crucial role in addressing AI bias in healthcare. Existing frameworks, such as the Health Insurance Portability and Accountability Act (HIPAA), primarily focus on privacy and security, not fairness. There is a growing consensus that new regulations are needed to specifically target algorithmic bias.

One starting point is the FDA’s approach to Software as a Medical Device (SaMD), which provides guidance on evaluating the safety and effectiveness of AI tools. While a step in the right direction, this framework must be expanded to include fairness assessments. Regulatory approval should depend not only on clinical efficacy but also on equitable performance across diverse populations.

Policymakers can also mandate transparency through algorithmic impact assessments. These assessments would require developers to document the potential social and ethical risks of their models before deployment. Similar to environmental impact statements, these tools would promote accountability and public awareness.

Standard-setting organizations such as the National Institute of Standards and Technology (NIST) have begun publishing guidance for trustworthy AI, including the voluntary AI Risk Management Framework. These guidelines can help harmonize efforts across industry, academia, and government. However, voluntary standards alone are insufficient; enforceable policies are necessary to prevent harm and incentivize responsible innovation.

International collaboration will also be key. Health systems around the world are grappling with similar challenges, and cross-border cooperation can accelerate the development of global standards. Ethical AI in healthcare is not just a national issue—it is a global imperative.

The Future of Ethical Innovation in Medical AI

Despite the challenges, the future of AI in healthcare holds great promise if it is guided by ethical innovation. Emerging approaches such as federated learning, which trains models across many institutions without pooling raw patient records in one place, offer a path toward more inclusive and privacy-preserving AI. Likewise, explainable AI (XAI) is gaining traction as a means of making model decisions more transparent and understandable.
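The core loop of federated learning can be illustrated with federated averaging (FedAvg). This is a deliberately small sketch under stated assumptions: a one-parameter linear model, full-batch gradient descent at each site, and hypothetical data from three hospitals. Real deployments use dedicated frameworks and typically add protections such as secure aggregation or differential privacy, because shared model updates can still leak information.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few epochs of gradient descent for the model y ≈ w * x on one
    site's data. Only the updated weight leaves the site, not the records."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(sites, rounds=30, w=0.0):
    """FedAvg-style training: each round, every site starts from the current
    global weight, trains locally, and the server averages the returned
    weights in proportion to each site's number of records."""
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        local_weights = [local_update(w, d) for d in sites]
        w = sum(len(d) * wk for d, wk in zip(sites, local_weights)) / total
    return w


# Hypothetical (x, y) records held at three hospitals; the true relation is y = 2x.
hospital_a = [(1.0, 2.0), (2.0, 4.0)]
hospital_b = [(3.0, 6.0)]
hospital_c = [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)]
w = federated_averaging([hospital_a, hospital_b, hospital_c])
print(w)  # close to the true slope of 2.0
```

Each hospital's records stay local; only the single weight `w` travels to the server, and the averaged model recovers the shared relationship y = 2x.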

Interdisciplinary collaboration will be essential for ethical progress. Engineers, clinicians, ethicists, sociologists, and patient advocates must work together to ensure that AI tools reflect diverse perspectives. Medical schools and engineering programs are beginning to offer joint training in AI and ethics, equipping the next generation of professionals with the skills to lead responsibly.

Patient-centered design is another promising avenue. By engaging communities in the design and testing of AI tools, developers can create systems that are not only technically robust but also socially responsive. This approach recognizes patients as active participants in innovation, not passive recipients.

Innovative startups and academic labs are also pioneering tools that explicitly prioritize fairness. For instance, some projects are developing bias-aware models that dynamically adjust predictions based on demographic context. Others are using synthetic data to supplement underrepresented groups in training datasets, thereby improving model generalizability.

Ultimately, the path forward must balance innovation with caution. While AI can undoubtedly enhance healthcare, it must be developed and deployed with a deep commitment to equity, transparency, and patient well-being. Ethical innovation is not an add-on—it is the foundation of responsible AI.

Frequently Asked Questions: AI Bias in Healthcare

1. What are some overlooked factors that contribute to AI bias in healthcare?

Beyond skewed datasets and flawed algorithms, subtle operational practices can intensify AI bias in healthcare. For instance, inconsistent data labeling across institutions often leads to discrepancies that mislead machine learning systems. Differences in documentation standards between hospitals can result in models favoring patients from well-documented systems, which are often associated with wealthier or urban populations. Moreover, administrative data such as billing codes can distort algorithmic outputs when used as proxies for health outcomes, misrepresenting how sick a patient actually is. These overlooked nuances make genuine cases of AI bias harder to identify and show that systemic reform must accompany technical solutions.

2. How do patient trust and AI transparency intersect in addressing healthcare bias?

Trust is a cornerstone of effective care, and opaque AI systems risk eroding it. When patients are unaware that algorithms guide aspects of their care, especially if those algorithms are flawed, they may come to distrust both the technology and the clinicians using it. Transparent AI systems that provide understandable explanations for their decisions can help rebuild confidence. Patients are more likely to engage positively with care when they understand how conclusions are reached, particularly in communities that have been disproportionately affected by documented cases of AI bias. Creating transparent interfaces is not just a technical priority; it is a fundamental trust-building exercise rooted in the ethical use of AI.

3. What psychological effects might AI bias have on marginalized patients?

Marginalized individuals already navigating systemic discrimination may feel further devalued when affected by biased algorithms. Encountering AI bias in healthcare can exacerbate feelings of alienation, helplessness, and mistrust toward medical institutions. These psychological responses are not trivial: they can lead to delayed care, underreporting of symptoms, or complete avoidance of the healthcare system. Long-term exposure to biased treatment can also reinforce internalized stigma, compounding disparities. Recognizing the emotional weight of these experiences is essential in conversations about AI bias in healthcare, because healing involves more than technological correction; it requires patient-centered empathy.

4. Are there any promising international approaches to mitigating AI bias in healthcare?

Several countries are pioneering proactive frameworks to address AI bias. For example, the United Kingdom’s NHS AI Lab emphasizes inclusivity in dataset collection, ensuring diverse patient representation across regions and ethnicities. In Canada, regulatory bodies have introduced AI assessment protocols that include social impact reviews prior to deployment. Meanwhile, Singapore’s Ministry of Health has piloted explainable AI tools in public hospitals, allowing clinicians to override or question algorithmic outputs. These initiatives offer scalable models that move beyond documenting examples of AI bias and instead focus on systemic prevention. Cross-border collaboration could strengthen these efforts by sharing lessons and standardizing fairness metrics.

5. How might AI bias influence clinical education and future medical training?

Medical education is evolving to include AI literacy, and awareness of algorithmic bias is becoming a necessary competency. Understanding AI bias in healthcare enables clinicians to critically evaluate the tools they use rather than accept their outputs unconditionally. Forward-thinking institutions are integrating courses that combine data science with ethics, empowering future physicians to spot discrepancies that might otherwise go unnoticed. As more examples of AI bias in healthcare surface, clinicians will be expected not only to treat patients but also to question the algorithms guiding their decisions. Embedding this mindset early in training can shape a generation of more vigilant and ethically grounded healthcare providers.

6. Can AI systems be designed to actively counteract existing healthcare disparities?

Yes. AI has the potential not just to perpetuate disparities but to actively reduce them, if designed with equity as a core goal. Developers are experimenting with algorithms that adjust decision thresholds for different population groups, compensating for structural disadvantages reflected in the training data. For instance, predictive tools can be recalibrated to weigh underserved communities more heavily, promoting fairer access to care. Additionally, community-informed design, in which underserved populations help shape AI tools, can lead to more culturally sensitive and responsive solutions. In this way, understanding AI bias in healthcare becomes a springboard for inclusive, justice-driven innovation rather than a barrier to overcome.
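Group-specific thresholds are a post-processing technique in the spirit of the "equal opportunity" fairness criterion: instead of one cut-off for everyone, each group gets the threshold that catches the same share of its true positives. The sketch below assumes hypothetical risk scores in which the model systematically under-scores group B; the function names and data are illustrative.

```python
import math


def threshold_for_tpr(scores, labels, target_tpr):
    """Highest score threshold that flags at least `target_tpr` of this
    group's true positives (0 < target_tpr <= 1)."""
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    # Number of positives that must be caught; the small epsilon guards
    # against floating-point products landing just above an integer.
    k = max(1, math.ceil(target_tpr * len(positives) - 1e-9))
    return positives[k - 1]


def tpr_at(scores, labels, threshold):
    """True-positive rate within one group at a given threshold."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    return sum(s >= threshold for s in pos) / len(pos)


# Hypothetical risk scores: the model systematically scores group B lower.
scores_a = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]
scores_b = [0.6, 0.5, 0.4, 0.35, 0.3, 0.1]
labels = [1, 1, 1, 1, 0, 0]  # same true outcomes in both groups

t_a = threshold_for_tpr(scores_a, labels, 0.75)  # 0.7
t_b = threshold_for_tpr(scores_b, labels, 0.75)  # 0.4
print(tpr_at(scores_b, labels, t_a), tpr_at(scores_b, labels, t_b))  # 0.0 0.75
```

With a single threshold of 0.7, the model catches none of group B's true positives; with group-specific thresholds, both groups reach 75%. This sketch ignores false-positive rates, and using group membership at decision time raises legal and ethical questions that differ by jurisdiction, so it is a starting point for analysis rather than a deployment recipe.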

7. What role does health insurance data play in perpetuating AI bias in healthcare?

Insurance claims data, often used to train medical algorithms, are deeply tied to socioeconomic status and access to care. Patients with comprehensive insurance tend to have more frequent healthcare interactions, which creates richer records. Conversely, those with limited coverage may appear healthier to an AI model simply because their data are sparse, not because they are actually well. This skew produces cases of AI bias in which underinsured individuals are deprioritized for interventions because the model mistakenly assumes they are at lower risk. To counteract this, developers must contextualize insurance data within broader social determinants of health and explore supplementary data sources that reflect the full spectrum of patient experiences.

8. How can patients and the public influence the development of ethical healthcare AI?

Patients have a powerful yet underused role in shaping healthcare AI. Public advisory boards, participatory design sessions, and patient feedback loops can all inform the ethical parameters of AI development. When patients, especially those from marginalized communities, share their experiences of AI bias in healthcare, developers gain critical insight into model weaknesses and blind spots. Advocacy groups are increasingly pushing for inclusive technology assessments and patient representation on AI ethics committees. Encouraging patient involvement is not just a gesture of goodwill; it is a strategic necessity for building tools that are both technically sound and socially just.

9. What emerging technologies could help detect and mitigate AI bias more effectively?

Advanced audit tools and bias-detection frameworks are gaining traction in healthcare AI development. Techniques such as adversarial testing, in which models are deliberately challenged with diverse or edge-case inputs, can expose hidden vulnerabilities. Tools like IBM’s AI Fairness 360 and Google’s What-If Tool let developers examine how a model performs across different demographic groups. Additionally, federated learning allows AI systems to be trained on decentralized, locally stored data, which makes it easier to include records from more diverse institutions and can reduce demographic imbalance in training data. As the field matures, these innovations could become essential for identifying AI bias before systems are deployed at scale.

10. What long-term societal impacts could unchecked AI bias in healthcare have?

If left unaddressed, AI bias could institutionalize and scale healthcare disparities globally. Widening gaps in diagnostic accuracy, care recommendations, and access to life-saving interventions could disproportionately affect already marginalized groups. This scenario risks creating a two-tiered system in which algorithmically favored populations receive superior care while others face systemic neglect. Moreover, persistent AI bias could erode public confidence in both medicine and technology, delaying the adoption of genuinely beneficial innovations. Addressing bias now is not merely about fixing flawed tools; it is about protecting the ethical integrity and inclusiveness of future healthcare.

Conclusion: Confronting AI Bias in Healthcare with Purpose and Precision

AI bias in healthcare is a challenge that demands our immediate and sustained attention. The technology is advancing rapidly, but without intentional efforts to address its flaws, it risks exacerbating the very disparities healthcare seeks to eliminate. From biased data inputs to opaque model outputs, the pitfalls are numerous—but so are the opportunities for improvement.

Real-world examples have shown us what happens when AI bias in healthcare goes unchecked: delayed diagnoses, unequal access to care, and the erosion of trust in medical institutions. These outcomes are not mere statistical anomalies—they are lived realities for patients across racial, gender, and socioeconomic lines. Addressing these issues is not just a technical necessity but a profound ethical obligation.

Fortunately, a growing coalition of researchers, clinicians, policymakers, and patients is pushing for change. By investing in diverse datasets, implementing fairness audits, and strengthening regulatory oversight, the medical community can steer AI innovation toward justice rather than inequity. Ethical design principles must be integrated into every phase of AI development, from ideation to deployment.

Examples of AI bias in healthcare serve as both warnings and catalysts. They remind us of the dangers of complacency, but they also illuminate a path forward grounded in collaboration, transparency, and equity. As we stand on the frontier of medical technology, we must ask not only what AI can do, but what it should do. Only then can we harness its full potential to heal rather than harm.

The conversation about AI bias in healthcare is not just a technical one—it is a human one. And it is a conversation we must continue with purpose, precision, and unwavering commitment to ethical innovation.
