The advent of artificial intelligence (AI) has transformed much of the landscape of medical research, offering new ways to analyze complex datasets, predict disease trajectories, and accelerate the development of therapies. However, AI’s promise comes with significant challenges, particularly the ethical issues that arise when these technologies are applied to healthcare and biomedical discovery. As AI becomes more deeply embedded in medical innovation, it is imperative to examine the ethical considerations that accompany its use, so that technological advancement does not outpace our ability to safeguard human dignity, equity, and trust.


Understanding the Scope of Ethical Issues with AI in Medical Research

The scope of ethical issues with AI in medical research is vast and complex, encompassing concerns about data privacy, algorithmic bias, informed consent, and the transparency of AI-driven decision-making. These challenges are not merely hypothetical but have real-world implications for patient outcomes, research integrity, and public trust in medical institutions. As AI systems grow more sophisticated, they are entrusted with decisions that directly impact human lives, heightening the urgency of addressing ethical shortcomings.

One of the primary ethical concerns is the use of personal health data to train AI models. Medical data, by its very nature, is deeply sensitive, and improper handling or unauthorized access can lead to serious breaches of privacy. Furthermore, AI algorithms often reflect and even exacerbate existing biases in the data they are trained on, leading to disparities in healthcare outcomes across different demographic groups. Ethical issues with AI in this context include the potential for discrimination, loss of autonomy, and the undermining of traditional doctor-patient relationships.

Moreover, the opacity of many AI models, often referred to as the “black box” problem, raises questions about accountability and trust. If an AI system makes a flawed recommendation that leads to harm, it can be difficult to determine where responsibility lies. This lack of transparency challenges the ethical foundations of informed consent, as patients and even healthcare providers may not fully understand how decisions are being made.

The Intersection of Artificial Intelligence Issues and Medical Discovery

Artificial intelligence issues in medical research intersect with broader societal concerns about technology and ethics. As AI systems are deployed in clinical trials, drug discovery, and diagnostic processes, the stakes rise accordingly. Researchers must grapple with how to balance innovation against patient safety and rights.

One pressing issue is the risk of over-reliance on machine learning predictions without sufficient human oversight. While AI can identify patterns and correlations that might elude human researchers, it lacks the understanding of context, values, and ethics that human judgment provides. This limitation underscores the need for a hybrid approach in which AI augments, but does not replace, human decision-making.

Additionally, intellectual property rights and the ownership of AI-generated discoveries present novel challenges. When an AI system identifies a potential treatment pathway, who holds the patent rights? These questions complicate the traditional frameworks of research ownership and necessitate new ethical and legal paradigms.

In the realm of public health, the deployment of AI raises ethical concerns about surveillance and autonomy. Predictive algorithms that monitor populations for disease outbreaks or individual risk factors can offer significant benefits, but they also risk infringing on personal freedoms if not carefully regulated. Thus, addressing artificial intelligence issues in medical research requires a holistic approach that considers both technological capabilities and human rights.

Ethical Issues with AI: Bias and Fairness in Medical Research

Bias and fairness are among the most critical ethical issues with AI in medical research. AI models learn from historical data, and if that data contains biases, whether from systemic inequities, flawed study designs, or underrepresentation of certain groups, the model’s outputs are likely to reflect and perpetuate them.

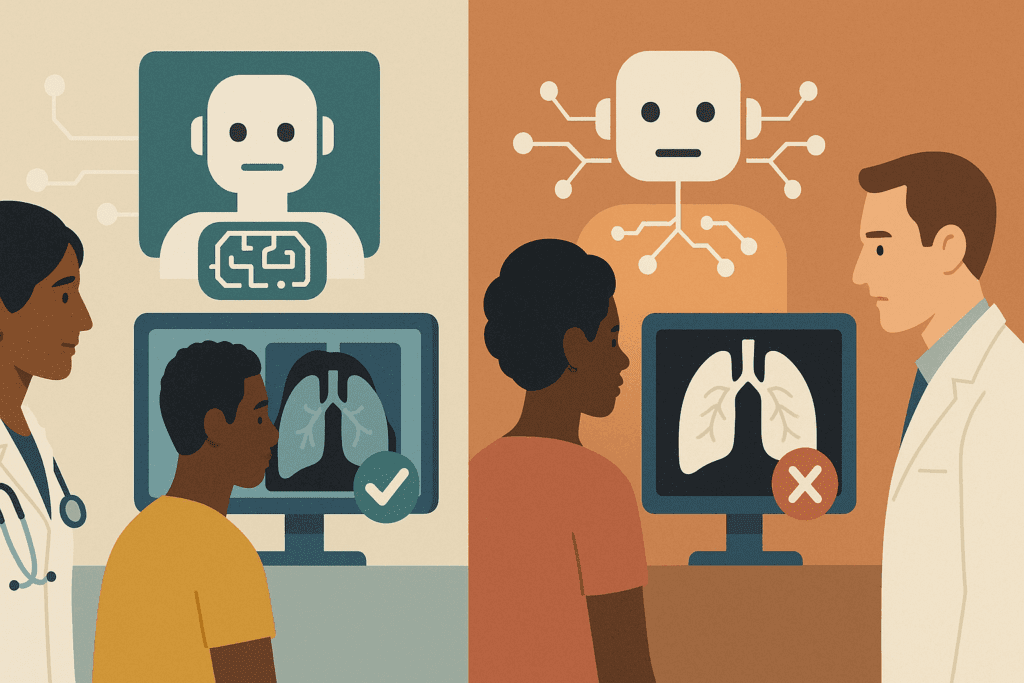

For example, several studies have shown that diagnostic AI systems trained predominantly on data from white patients perform less accurately for patients of color. This discrepancy is not just a technical flaw; it is an ethical failure with potentially life-threatening consequences. Inaccurate diagnoses can delay treatment, worsen health outcomes, and erode trust in the healthcare system among marginalized communities.

Addressing bias requires proactive measures at every stage of the AI development pipeline. Data collection must be inclusive and representative, model training processes should incorporate fairness metrics, and validation efforts must rigorously test for disparate impacts across demographic groups. Furthermore, transparency about the limitations and potential biases of AI systems should be a standard practice, allowing healthcare providers and patients to make informed decisions.
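To make "fairness metrics" and "testing for disparate impacts" more concrete, here is a minimal Python sketch. It assumes binary labels and predictions, plus a single demographic attribute per patient. The function names and toy data are hypothetical. The two summary numbers are illustrative choices, not a standard. The first is the gap between the best and worst group sensitivity, which is the idea behind "equal opportunity". The second is the ratio of lowest to highest selection rate, where a common but contested rule of thumb flags values below 0.8.

```python
from collections import defaultdict

def subgroup_report(y_true, y_pred, groups):
    """Per-group sensitivity (true-positive rate) and selection rate for binary labels."""
    tally = defaultdict(lambda: {"n": 0, "pos": 0, "tp": 0, "pred_pos": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        t = tally[g]
        t["n"] += 1
        t["pos"] += int(yt == 1)
        t["tp"] += int(yt == 1 and yp == 1)
        t["pred_pos"] += int(yp == 1)
    report = {}
    for g, t in tally.items():
        report[g] = {
            "n": t["n"],
            # Sensitivity is undefined for a group with no positive cases.
            "sensitivity": t["tp"] / t["pos"] if t["pos"] else None,
            "selection_rate": t["pred_pos"] / t["n"],
        }
    return report

def disparity_summary(report):
    """Summarize how far apart the groups are (0 gap / 1.0 ratio would mean parity)."""
    sens = [r["sensitivity"] for r in report.values() if r["sensitivity"] is not None]
    rates = [r["selection_rate"] for r in report.values()]
    return {
        "sensitivity_gap": max(sens) - min(sens),
        "selection_ratio": min(rates) / max(rates) if max(rates) > 0 else None,
    }

# Hypothetical toy data: the model misses most true cases in group B.
y_true = [1, 1, 1, 0, 0, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

report = subgroup_report(y_true, y_pred, groups)
summary = disparity_summary(report)
print(report)
print(summary)
```

Overall accuracy on this toy data is 80%, yet group B's sensitivity is only one third. This is the kind of disparity a single headline metric can hide. With real cohorts, small subgroups make these estimates noisy, so confidence intervals should accompany any such report.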

Beyond technical solutions, addressing bias demands a cultural shift in how medical research is conducted. It requires a commitment to diversity, equity, and inclusion, not just in study populations but also among the researchers, developers, and policymakers shaping AI technologies. Only through such comprehensive efforts can we hope to mitigate the ethical issues with AI related to bias and fairness.

Navigating AI Problems in Data Privacy and Consent

Among the many AI problems faced in medical research, data privacy and informed consent stand out as particularly thorny ethical challenges. Medical data is uniquely sensitive, and its misuse can have devastating consequences for individuals, including discrimination, stigmatization, and psychological harm.

Traditional models of informed consent are ill-suited to the complexities of AI research. Patients may consent to the use of their data for a specific study, only to have that data repurposed for other AI projects without their explicit knowledge or approval. Moreover, the technical nature of AI models makes it difficult for patients to fully understand how their data will be used, undermining the principle of truly informed consent.

One potential solution is the adoption of dynamic consent models, which allow patients to give ongoing, granular permissions for the use of their data. Blockchain technologies have also been proposed as a means to enhance data security and auditability. However, these solutions must be implemented with care, ensuring that they do not create new barriers to participation for already marginalized groups.
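Here is one way to picture how dynamic consent could work underneath. The sketch below is a hypothetical data structure, not any real consent platform's API. It assumes each patient's decisions are stored per purpose as timestamped events in an append-only log. A use of the data is permitted only if the most recent decision for that exact purpose is a grant, and the default is "no". An append-only log is also the simplest form of the auditability mentioned above. It is not a blockchain.

```python
from dataclasses import dataclass, field
from datetime import datetime

@dataclass
class ConsentLedger:
    """Hypothetical append-only record of consent decisions (illustrative only)."""
    events: list = field(default_factory=list)

    def record(self, patient_id: str, purpose: str, granted: bool, when: datetime) -> None:
        # Decisions are appended, never edited, so an auditor can replay the history.
        self.events.append((when, patient_id, purpose, granted))

    def is_permitted(self, patient_id: str, purpose: str, at: datetime) -> bool:
        # The latest decision at or before `at` wins; with no decision, the default is "no".
        decision = False
        for when, pid, purp, granted in sorted(self.events, key=lambda e: e[0]):
            if pid == patient_id and purp == purpose and when <= at:
                decision = granted
        return decision


ledger = ConsentLedger()
ledger.record("patient-001", "train:sepsis-model", True, datetime(2024, 1, 10))
ledger.record("patient-001", "train:sepsis-model", False, datetime(2024, 6, 1))

print(ledger.is_permitted("patient-001", "train:sepsis-model", datetime(2024, 3, 1)))  # True
print(ledger.is_permitted("patient-001", "train:sepsis-model", datetime(2024, 7, 1)))  # False
print(ledger.is_permitted("patient-001", "share:commercial-partner", datetime(2024, 3, 1)))  # False
```

Permissions are granular because they are keyed by purpose, so consent to train one model does not carry over to a different use. The purpose labels are invented for this example.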

Ultimately, addressing data privacy and consent issues requires a commitment to transparency, respect for autonomy, and the development of robust regulatory frameworks that hold researchers and institutions accountable. Only by confronting these AI problems head-on can we maintain public trust and protect the fundamental rights of research participants.

The Broader Artificial Intelligence Controversy: Trust, Transparency, and Accountability

The artificial intelligence controversy extends far beyond technical glitches and data breaches; it strikes at the heart of societal trust in technology and institutions. In the context of medical research, trust is paramount. Patients must believe that researchers have their best interests at heart, that their data will be handled responsibly, and that AI systems will be used to enhance, not undermine, human welfare.

Transparency is a key component of building and maintaining trust. Researchers and developers must be forthright about the capabilities and limitations of AI systems, avoiding the temptation to overhype results or obscure risks. Explainable AI (XAI) techniques, which aim to make AI decision-making processes more interpretable, represent an important step in this direction. However, transparency must extend beyond technical explanations to include clear communication with patients, healthcare providers, and the broader public.
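Explainable AI covers many techniques. The simplest case shows what an "explanation" can look like. For a linear risk score, each feature's contribution relative to a reference patient is its weight times its deviation from that reference. These contributions add up exactly to the difference in scores. When the reference is the cohort average, this matches what SHAP-style attributions reduce to for linear models. The weights, intercept, baseline, and feature names below are invented for illustration and do not describe any validated clinical model. Nonlinear models need other methods, such as SHAP or LIME.

```python
# Hypothetical linear risk score: weights and baseline are invented for illustration only.
INTERCEPT = -3.0
WEIGHTS = {"age_decades": 0.4, "systolic_bp_per_10": 0.3, "smoker": 0.9}
BASELINE = {"age_decades": 5.0, "systolic_bp_per_10": 12.0, "smoker": 0.0}  # assumed reference patient

def risk_score(x):
    """Linear score (e.g. log-odds) for one patient."""
    return INTERCEPT + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)

def attributions(x):
    """Each feature's contribution relative to the baseline patient: w_i * (x_i - baseline_i)."""
    return {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}

patient = {"age_decades": 7.0, "systolic_bp_per_10": 14.0, "smoker": 1.0}
contrib = attributions(patient)

# Contributions add up exactly to the score difference from the baseline patient.
assert abs(sum(contrib.values()) - (risk_score(patient) - risk_score(BASELINE))) < 1e-9

# List features from largest to smallest influence on this patient's score.
for feature, value in sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature}: {value:+.2f}")
```

For this hypothetical patient, smoking status contributes most to the elevated score, followed by age and blood pressure. A clinician can check a ranking like this against their own judgment and explain it to a patient.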

Accountability is equally critical. When an AI system causes harm, there must be mechanisms in place to identify the root causes and assign responsibility. This includes holding developers, researchers, and institutions accountable for ethical lapses and ensuring that affected individuals have avenues for redress.

The artificial intelligence controversy in medical research thus demands a reimagining of ethical governance. It calls for interdisciplinary collaboration among ethicists, technologists, legal scholars, and patient advocates to create frameworks that uphold human rights and dignity in the age of AI.

Practical Strategies for Addressing Ethical Issues with AI in Medical Research

Addressing the ethical issues with AI in medical research requires more than theoretical discussions; it demands practical, actionable strategies that can be implemented at every stage of the research process. One foundational strategy is the establishment of robust ethical review processes specifically tailored to AI research. Institutional review boards (IRBs) and ethics committees must be equipped with the expertise to evaluate AI-related risks and benefits, moving beyond traditional biomedical ethics frameworks.

Another critical strategy is the integration of ethics-by-design principles into AI development. This approach embeds ethical considerations into the design and implementation of AI systems from the outset, rather than treating them as afterthoughts. It involves interdisciplinary collaboration, participatory design with input from diverse stakeholders, and continuous ethical impact assessments throughout the research lifecycle.

Education and training are also essential. Researchers, developers, and healthcare providers must be equipped with the knowledge and skills to recognize and address ethical challenges associated with AI. This includes not only technical training but also education in bioethics, social justice, and human rights.

Finally, fostering a culture of ethical reflection and accountability within research institutions is vital. Ethical behavior must be incentivized and rewarded, and whistleblowers who raise concerns about unethical AI practices must be protected and supported. By implementing these practical strategies, the medical research community can begin to systematically address the ethical issues with AI and build a more just and equitable future.

What Are the Ethical Issues of Artificial Intelligence in Global Health Research?

When considering the ethical issues of artificial intelligence in global health research, the stakes become even higher. Global health initiatives often involve vulnerable populations with limited access to healthcare resources, making ethical lapses particularly consequential.

One major ethical issue is the risk of “data colonialism,” where researchers from wealthier nations extract data from populations in lower-income countries without providing reciprocal benefits. This practice not only exploits vulnerable communities but also perpetuates global inequities in health and wealth. Ensuring equitable partnerships, fair data sharing agreements, and capacity building in local research institutions is essential to addressing this concern.

Another pressing issue is the applicability and relevance of AI models across diverse cultural and socioeconomic contexts. AI systems trained on data from high-income countries may not perform well in other settings, leading to inaccurate diagnoses, ineffective treatments, and exacerbated health disparities. Ethical AI research must prioritize the development of context-sensitive models that are validated and adapted to the specific needs of different populations.

Finally, the governance of AI in global health research raises complex questions about sovereignty, autonomy, and justice. Who controls AI technologies deployed in low-resource settings? Who benefits from AI-driven discoveries? Ensuring that global health AI initiatives are guided by principles of fairness, respect, and shared benefit is critical to overcoming these challenges.

By thoughtfully addressing the ethical issues of artificial intelligence in global health research, the global scientific community can harness AI’s potential to advance health equity and improve outcomes for all.

Emerging AI Problems in Personalized Medicine and Genomics

As personalized medicine and genomics become increasingly reliant on AI technologies, new AI problems arise that demand careful ethical scrutiny. Personalized medicine promises to tailor treatments to individual genetic profiles, but the use of AI to analyze genomic data introduces significant risks related to privacy, consent, and equity.

One of the foremost concerns is the potential for re-identification. Even anonymized genomic data can sometimes be traced back to individuals, posing serious risks to privacy. This risk is compounded when genomic data is shared across research institutions, companies, and national borders, often without robust safeguards.
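Genomic re-identification relies on more specialized techniques. Still, a simpler tabular check shows the basic reason "anonymized" does not mean "anonymous". After names are removed, combinations of ordinary attributes (quasi-identifiers) can still single a person out. The sketch counts how many records share each combination, which is the "k" in k-anonymity, and lists records whose combination is unique. The records, field names, and choice of quasi-identifiers are hypothetical.

```python
from collections import Counter

# Toy "de-identified" records: names removed, but quasi-identifiers remain (hypothetical data).
RECORDS = [
    {"zip3": "021", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip3": "021", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip3": "021", "birth_year": 1975, "sex": "M", "diagnosis": "asthma"},
    {"zip3": "946", "birth_year": 1990, "sex": "F", "diagnosis": "rare_disorder_x"},
]
QUASI_IDENTIFIERS = ("zip3", "birth_year", "sex")

def equivalence_class_sizes(records, qis):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in qis) for r in records)

def k_anonymity(records, qis):
    """A dataset is k-anonymous for k equal to its smallest equivalence-class size."""
    return min(equivalence_class_sizes(records, qis).values())

def unique_records(records, qis):
    """Records whose quasi-identifier combination appears only once (k = 1)."""
    sizes = equivalence_class_sizes(records, qis)
    return [r for r in records if sizes[tuple(r[q] for q in qis)] == 1]

print(k_anonymity(RECORDS, QUASI_IDENTIFIERS))  # 1
print(len(unique_records(RECORDS, QUASI_IDENTIFIERS)))  # 2
```

Two of the four records are unique on these three fields alone. Anyone who knows a patient's area, birth year, and sex from another source could link them to a diagnosis. Genomic data raises this risk further, because the sequence itself can act as an identifier.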

Another challenge is the potential for AI-driven genomics to exacerbate existing health disparities. If genomic databases primarily include data from populations of European descent, AI models trained on this data may not perform as well for individuals from other backgrounds. This lack of diversity in genomic data not only limits the benefits of personalized medicine but also raises profound ethical questions about justice and inclusion.

Moreover, the commercialization of genomic data and AI-driven insights raises issues of consent and ownership. Patients may not be fully aware of how their genetic information is being used, shared, or monetized. Ensuring transparent, informed consent processes and protecting individuals’ rights to their genetic data are essential steps in addressing these AI problems.

Navigating the ethical landscape of AI in personalized medicine and genomics requires a multidisciplinary approach that brings together geneticists, ethicists, legal experts, and patient advocates. Only through such collaborative efforts can we ensure that AI advances in these fields serve the broader goals of health equity and human dignity.

The Role of International Regulations in Mitigating Ethical Issues with AI

International regulations play a pivotal role in addressing ethical issues with AI in medical research, particularly as research becomes more globalized and data flows across borders with unprecedented ease. Existing regulatory frameworks, such as the General Data Protection Regulation (GDPR) in the European Union, provide important protections for data privacy and consent. However, many of these regulations were not specifically designed with AI technologies in mind and thus may fall short in addressing the unique ethical challenges posed by AI.

The need for international cooperation and harmonization of ethical standards is urgent. Without consistent global standards, researchers may engage in “ethics shopping,” seeking jurisdictions with the least restrictive regulations. This practice not only undermines ethical norms but also erodes public trust in medical research.

Efforts such as UNESCO’s Recommendation on the Ethics of Artificial Intelligence and the World Health Organization’s guidance on AI in health represent important steps toward establishing global ethical norms. These initiatives emphasize principles such as transparency, accountability, inclusivity, and respect for human rights. However, translating these high-level principles into enforceable regulations remains a significant challenge.

A truly effective international regulatory framework must be dynamic, capable of adapting to rapid technological advancements, and inclusive of diverse cultural, socioeconomic, and political perspectives. It must also prioritize the voices and needs of traditionally marginalized and underserved communities to ensure that AI-driven medical research promotes global health equity rather than exacerbating existing disparities.

By fostering international collaboration and establishing robust, enforceable standards, the global community can address ethical issues with AI more effectively and ensure that medical research benefits humanity as a whole.

Addressing AI Issues in Clinical Decision Support Systems

Clinical decision support systems (CDSS) powered by AI have the potential to revolutionize healthcare by assisting clinicians with diagnosis, prognosis, and treatment planning. However, these systems also introduce significant AI issues that must be carefully managed to protect patient welfare and uphold ethical standards.

One major concern is the risk of automation bias, where clinicians may place undue trust in AI-generated recommendations, even when they contradict clinical judgment or patient preferences. This phenomenon can lead to suboptimal care and disempower patients in decision-making processes.

Another critical issue is the explainability of AI-driven CDSS. If healthcare providers cannot understand how an AI system arrived at a particular recommendation, it becomes difficult to assess its validity or communicate its rationale to patients. This lack of transparency undermines informed consent and shared decision-making, core principles of ethical medical practice.

Moreover, AI-driven CDSS must be rigorously validated to ensure their safety and effectiveness across diverse patient populations. Validation studies should not only assess overall accuracy but also examine performance across different demographic groups to identify and mitigate potential biases.

To address these AI issues, healthcare institutions must implement comprehensive training programs that educate clinicians about the strengths and limitations of AI tools. Regulatory bodies should establish clear guidelines for the development, evaluation, and deployment of AI-driven CDSS, emphasizing transparency, accountability, and patient-centered care.

By proactively addressing the ethical and practical challenges associated with AI in clinical decision support, the medical community can harness the benefits of these technologies while safeguarding patient trust and well-being.

Future Directions: Ethical AI Innovation in Medical Research

Looking ahead, the future of ethical AI innovation in medical research hinges on the ability to integrate ethical considerations seamlessly into the research and development lifecycle. This proactive approach must move beyond reactive fixes to embed ethical reflection into the DNA of AI projects from inception.

One promising direction is the advancement of interpretable and explainable AI models that prioritize transparency without sacrificing performance. Research into XAI techniques continues to evolve, offering hope for AI systems that clinicians and patients can understand and trust. By making AI decision-making processes more transparent, we can empower users to critically evaluate recommendations and maintain a human-centered approach to healthcare.

Participatory research methodologies also hold great potential. Engaging patients, healthcare providers, ethicists, and community representatives in the design and evaluation of AI systems ensures that diverse perspectives inform development and helps align AI tools with the real-world needs and values of those they are intended to serve.

Additionally, fostering a vibrant ecosystem of interdisciplinary research and collaboration is vital. Bringing together expertise from computer science, medicine, ethics, law, and the social sciences can catalyze innovative solutions to the complex ethical issues with AI. Such collaboration encourages holistic thinking, recognizing that technological, social, and ethical dimensions are inextricably linked.

Investment in ethical AI research must be prioritized by funding agencies, governments, and private sector stakeholders alike. Dedicated funding streams for projects that explicitly address ethical challenges can accelerate progress and signal a strong commitment to responsible innovation.

By embracing these future directions, the medical research community can lead the way in demonstrating that ethical excellence and scientific innovation are not mutually exclusive but mutually reinforcing.

Frequently Asked Questions About Ethical Issues with AI in Medical Research

1. How are ethical issues with AI impacting the doctor-patient relationship?

The introduction of AI into clinical decision-making processes is subtly reshaping the traditional doctor-patient relationship. Many patients may feel distanced when treatment decisions are heavily influenced by algorithms rather than personal clinical judgment. This evolution challenges the historical notion of personalized care, as AI systems prioritize data-driven recommendations that may overlook individual patient nuances. Furthermore, when ethical issues with AI lead to opaque decision-making, it becomes harder for doctors to fully explain the rationale behind treatment choices, possibly weakening patient trust. Moving forward, integrating AI as a supportive tool—rather than a dominant decision-maker—will be key to preserving the empathy and individualized attention central to effective medical care.

2. What emerging trends are shaping solutions to AI problems in medical research?

Innovative frameworks like federated learning are beginning to offer promising solutions to persistent AI problems, especially regarding data privacy and inclusivity. Federated learning allows AI models to be trained across decentralized data sources without the need to centralize sensitive patient data. This emerging trend could address many ethical dilemmas by reducing the risk of data breaches while still benefiting from diverse datasets. Another growing trend is the use of “bias bounties,” where researchers are financially rewarded for finding and reporting biases in AI systems. By blending financial incentives with ethical oversight, the industry hopes to create more transparent and equitable medical research outcomes.
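The core loop of federated learning can be sketched in a few lines. Below is a toy version of federated averaging (FedAvg), one common federated learning algorithm. Two hypothetical hospitals each fit a straight line y = w·x + b on their own data. Only the updated parameters go to a coordinating server, which averages them weighted by each site's sample count. The data, learning rate, and round counts are assumptions chosen so the example converges. Production systems add protections such as secure aggregation or differential privacy, because model updates themselves can leak information.

```python
# Toy data: each "hospital" holds its own (x, y) pairs; raw records never leave the site.
SITES = {
    "hospital_a": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)],
    "hospital_b": [(4.0, 9.0), (5.0, 11.0), (6.0, 13.0)],
}

def local_update(params, data, lr=0.01, epochs=5):
    """Run a few full-batch gradient-descent steps on one site's private data (MSE loss)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def federated_averaging(sites, rounds=300):
    """Server loop: send current params out, collect local updates, average by sample count."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in sites.values())
    for _ in range(rounds):
        updates = [(local_update((w, b), d), len(d)) for d in sites.values()]
        w = sum(p[0] * n for p, n in updates) / total
        b = sum(p[1] * n for p, n in updates) / total
    return w, b

w, b = federated_averaging(SITES)
print(f"slope={w:.3f}, intercept={b:.3f}")
```

Both toy sites draw from the same underlying line, y = 2x + 1, so the averaged model should approach a slope of 2 and an intercept of 1. Neither site ever shares its raw data. With real, heterogeneous sites the picture is less tidy: differences between local datasets can slow or bias the shared model.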

3. How do artificial intelligence issues affect research funding priorities?

Artificial intelligence issues are increasingly influencing how funding bodies allocate resources in medical research. Some agencies now ask for ethical risk assessments alongside technical feasibility studies before funding AI-related research. This shift reflects a recognition that overlooking ethical risks can lead to costly project failures, public distrust, or even legal battles. Consequently, proposals that actively address ethical concerns, such as fairness, transparency, and data protection, may be better placed to secure competitive funding. In the long term, researchers who proactively tackle these issues will be better positioned to lead future scientific advancements.

4. What are lesser-known ethical concerns beyond bias and privacy?

While bias and privacy dominate discussions about ethical issues with AI, subtler problems are emerging as AI penetrates deeper into medical research. One underexplored concern is the “deskilling” of healthcare professionals, where reliance on AI might erode clinicians’ diagnostic and decision-making abilities over time. Another overlooked issue is emotional detachment: AI recommendations, by being perceived as “objective,” could discourage clinicians from applying their emotional intelligence when interacting with patients. Lastly, “algorithmic paternalism”—where AI systems override human preferences “for their own good”—raises profound questions about patient autonomy and dignity, signaling the need for more nuanced ethical frameworks.

5. Why is the artificial intelligence controversy particularly intense in genomics?

The artificial intelligence controversy in genomics stems from the field’s inherently sensitive nature and high stakes. AI-driven genomic analysis involves processing massive datasets that can reveal deeply personal information, from predispositions to diseases to ancestral origins. Unlike other types of health data, genomic data can also implicate family members who have not consented to data sharing, intensifying ethical complexities. Furthermore, commercial interests in genetic data create tension between profit motives and patient rights. This unique convergence of privacy risks, familial impacts, and commercialization makes genomics a focal point for ongoing debates about responsible AI use.

6. How can researchers minimize AI issues when developing diagnostic tools?

Researchers can minimize AI issues in diagnostic tool development by adopting a multi-pronged, proactive approach. One key strategy is “algorithmic auditing,” in which independent third parties rigorously test AI systems for hidden biases and vulnerabilities before clinical deployment. Additionally, integrating continuous feedback loops, where AI systems learn from real-world clinical outcomes, can improve system robustness and equity over time. Researchers should also prioritize explainable AI designs so that clinicians understand and trust the tools they are using. Finally, early and frequent engagement with diverse patient populations during the design phase helps ensure that diagnostic AI tools are inclusive and ethically sound.

7. What are the long-term psychological effects of overreliance on AI in healthcare?

Overreliance on AI in healthcare could subtly but profoundly reshape human psychology, particularly within the clinician community. Constantly deferring to AI recommendations may diminish clinicians’ confidence in their own expertise and critical thinking abilities over time. This psychological phenomenon, known as “automation complacency,” has already been observed in industries like aviation and could similarly impact medical fields. Moreover, patients may develop unrealistic expectations, believing AI systems are infallible and losing faith in human-led care when discrepancies arise. Recognizing these risks early is essential to developing AI systems that enhance, rather than undermine, human skills and resilience.

8. How does public perception of AI problems influence medical innovation?

Public perception of AI problems exerts a significant influence over the pace and direction of medical innovation. High-profile failures, scandals, or misuses of AI technology can provoke widespread skepticism, leading to more stringent regulations and slower innovation cycles. Conversely, when the public perceives that AI is being developed transparently and ethically, acceptance grows, and funding flows more freely into cutting-edge projects. To foster positive perceptions, researchers must prioritize open communication, acknowledge limitations, and demonstrate a genuine commitment to addressing ethical concerns. Ultimately, a well-informed public can serve as a powerful ally in steering AI toward socially beneficial outcomes.

9. Addressing Ethical Issues with AI: What Can Patients Do to Protect Themselves?

Patients are not powerless in addressing ethical issues with AI and can take active steps to protect their rights. First, they should ask healthcare providers whether AI tools are being used in their care and request understandable explanations about how these tools influence decisions. Patients should also inquire about how their data is being stored, used, and shared, insisting on transparency and seeking dynamic consent opportunities when available. Additionally, participating in advocacy groups that champion data rights and ethical AI development can amplify patient voices in shaping future regulations. An informed, proactive patient community is a critical safeguard against potential abuses of AI in healthcare.

10. What are the ethical issues of artificial intelligence in cross-border research?

Understanding the ethical issues of artificial intelligence in cross-border medical research is crucial as collaborations become more global. Differences in privacy laws, cultural norms, and healthcare standards can lead to ethical inconsistencies when AI models trained in one country are deployed in another. For example, a diagnostic AI developed using datasets from Western countries may perform poorly when applied in rural regions of developing nations. Moreover, questions about data sovereignty, meaning who owns and controls data collected from a country’s citizens, complicate matters further. Addressing these ethical challenges requires international cooperation, culturally sensitive AI designs, and binding global agreements that prioritize equity and respect for diverse populations.

Conclusion: Embracing Ethical Stewardship in the Age of AI

The transformative potential of AI in medical research is undeniable. Yet, this potential can only be fully realized if it is accompanied by a steadfast commitment to ethical stewardship. Addressing the ethical issues with AI is not merely an academic exercise; it is a moral imperative that demands courage, creativity, and collaboration.

Throughout this exploration, we have examined the multifaceted ethical challenges posed by AI, including issues of data privacy, bias, transparency, accountability, and global health equity. We have seen how problems such as automation bias and data re-identification threaten to undermine the very trust that medical research depends upon, and we have considered the broader controversy over AI and its ethical implications in diverse research contexts.

The path forward requires a holistic, interdisciplinary approach that centers human dignity, rights, and well-being. It requires robust international regulations, participatory design processes, transparent communication, and a relentless commitment to equity and justice. It requires us to recognize that the ultimate goal of AI in medical research is not simply to optimize efficiency or profitability but to advance human flourishing.

By embracing ethical stewardship as a guiding principle, the medical research community can harness AI’s transformative power to create a healthier, more equitable, and more compassionate world. The challenges are formidable, but so too is our capacity for ingenuity and moral leadership. In the end, the true measure of our success with AI will not be the sophistication of our algorithms but the depth of our humanity.
